Dear Sydney...

https://www.youtube.com/watch?v=wfEEAfjb8Ko

Now we need the machine to write a handwritten letter and sign it, to complete the effect of genuine human connection.

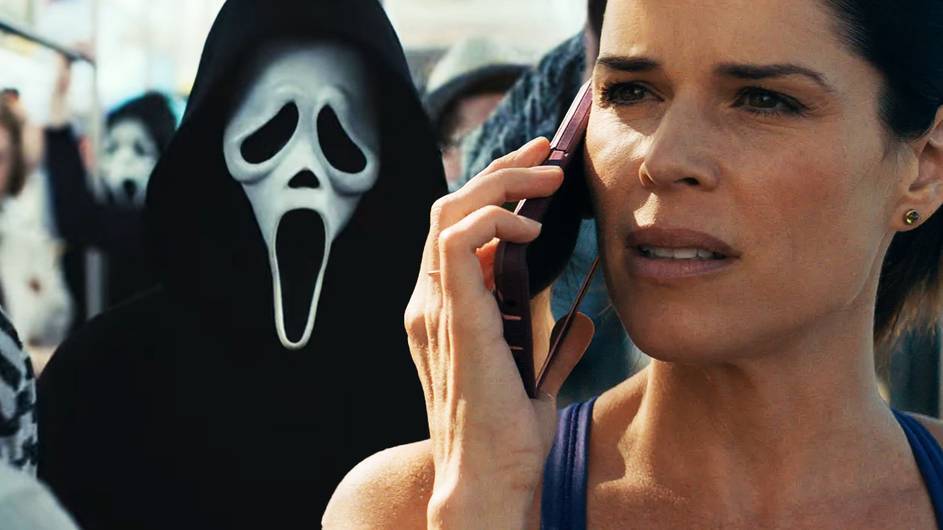

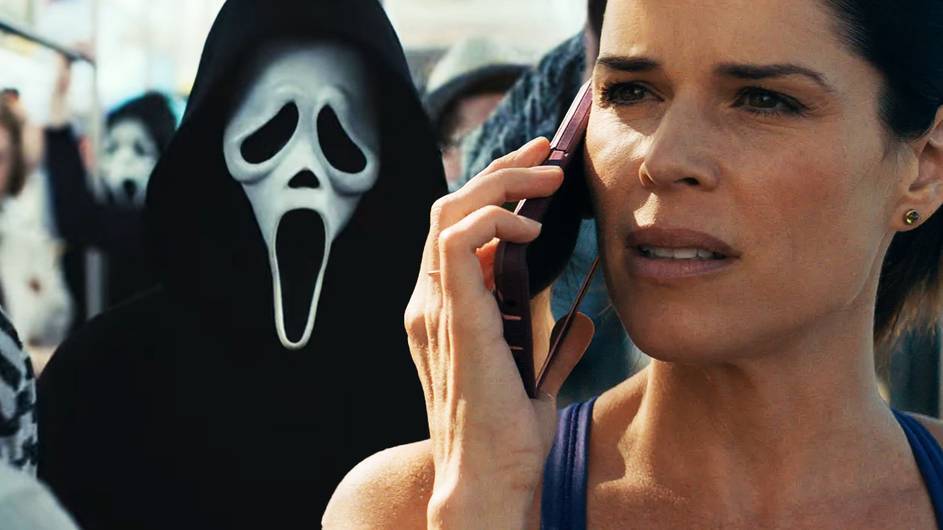

This and the Nike ad have been the worst ads during the Olympics.

I saw a movie the other day, and all of the ads before the previews were about AI. It was awful, and I hated it. One of them was this one, and yes... Terrible.

I saw a similar ad in theaters this week. It started by asking Gemini to write a breakup letter, and I thought my friend next to me was going to cry because she's going through a breakup, but then right at the end it goes "...to my old phone, because the Pixel 9 is just so cool!"

Gemini is awesome. I use it all the time for applied algebra and coding, but using it to replace human emotions is not awesome. Google can do better.

I've been watching quite a lot of Olympics coverage on TV, but I've never seen any ads. Is there an official Olympics TV channel with these ads?

As a non-native English speaker, this is actually one of the better uses of LLMs for me. When I need to write in "fancier" English, I ask an LLM and use its output as a starting point (sometimes I end up making heavy modifications, sometimes light ones). I mean, this is one of the more logical uses of an LLM: it is good at languages (unlike trying to get it to solve math problems).

And I don't agree with the POV that just because you use LLM output as a good starting point, it stops being personal.

The problem with this is that effectively you aren’t speaking anymore; the bot does it for you. And if on the other side someone does not read anymore (the bot does it for them), then we are in a very bizarre situation where all sorts of crazy shit starts to happen that never did before.

You will ‘say’ something you didn’t mean at all, and they will ‘read’ something that wasn’t there. Language itself, communication itself, collapses.

If everyone relies on it, this will lead to total paralysis of society, because the tool is flawed, but in a way that is not immediately apparent until it is everywhere, processes its own output, and chokes on the garbage it produces.

It wouldn’t be so bad if the flaw were immediately apparent, but it seems so helpful and nice. What can go wrong?