The bots can have Reddit. I'm happy here

Hope their advertisers are happy serving to bots

Sorry, but as an AI language model, I cannot buy products or spend money on your company's services.

God I wish I could upvote this, but after about 5 minutes of trying to get it to stick, I will leave this comment instead. Well played ;)

Sorry, but as a meat popsicle, I'm not interested in commenting on your site.

Check out these two top level comments, five minutes apart. It's like comment(); sleep(300); comment().
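For anyone who wants to check that kind of timing automatically, here is a minimal sketch. It assumes you already have the comment timestamps as `datetime` objects. The function name `looks_scripted` and the 5-second tolerance are made up for illustration, and a fixed gap is only a hint, not proof of a bot.

```python
from datetime import datetime, timedelta

def looks_scripted(timestamps, interval_s=300, tolerance_s=5):
    """Return True if every gap between consecutive comments is
    within tolerance_s seconds of interval_s (hypothetical heuristic)."""
    if len(timestamps) < 2:
        return False  # a single comment has no gap to measure
    ordered = sorted(timestamps)
    gaps = [(later - earlier).total_seconds()
            for earlier, later in zip(ordered, ordered[1:])]
    return all(abs(gap - interval_s) <= tolerance_s for gap in gaps)

# Two comments roughly five minutes apart, like the pair pointed out above.
first = datetime(2023, 6, 12, 12, 0, 0)
print(looks_scripted([first, first + timedelta(seconds=301)]))
```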

I studied bot patterns on Reddit for a few years while using the site and was active in their takedown. My username is the same on there if you want to see the history of my involvement. What drove me to stop being so involved in bot takedowns is the extent to which Reddit as a site was continually redesigned to favor bots. In fact, I woke up today to a 3-day suspension for reporting a spam bot as a spam bot. I think what we need to examine in these cases, if possible, is whether the bots were made strictly for the purpose of contesting blackouts (i.e. by Reddit themselves) or whether they were made by a hobbyist or spammer. Given that these are on r/programming, it seems more likely that a hobbyist programmer made these bots for a laugh or something, rather than it being an inside job. If the usual resources of Reddit’s API were accessible enough to provide a total history of these bot accounts’ posts and comments, then that would help to clarify (this is what I mean about Reddit redesigns favoring bots). On the subject, I think Lemmy needs to start implementing preemptive anti-bot features while it is in an embryonic stage of becoming a large social media site (or a pseudo-social media site like Reddit) to future-proof its development.

What kind of bot detection features should Lemmy add in your opinion?

I’m very new to this site so I’m not sure what all already exists. Some features that come to mind based on my experience on Reddit and other sites:

As someone who had my 16+ year old account on Reddit permabanned for writing antibot scripts trying to keep the community I modded free from scammers and spammers, this is spot on.

Super useful. I hope these suggestions will eventually land on the GitHub page.

Sorry, but as an AI language model, I cannot comment on this post.

You know I had suspected this because I mean why wouldn't they but damn they're just kinda incompetent with it.

Like why wouldn't they backdate the accounts?

Probably the simplest answer is they can get away with it. They have been getting away with it. Botting reddit appears to be extremely easy.

During the pandemic lockdowns, out of boredom, I would scroll r/all to bookmark obvious bot accounts. I had like a >95% success rate. There are only a few markers to pick out.

Once these conditions are met the account goes dormant. Some time later it will become active again. The majority of the time the accounts were spamming cryptocurrency scams.

The final step which often happens is the account gets deleted. Not removed or shadowbanned by reddit but self deleted.

I bookmarked hundreds of accounts over the course of a year or so. Only a small fraction were false positives. I'm sure a coder could easily have written a spam bot detection system based on this.
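A rough sketch of what the lifecycle part of such a detector might look like, covering only the "goes dormant, comes back spamming crypto" stage described above. It does not reproduce the actual markers, which are not listed in this thread. The function name, the 60-day gap, and the keyword list are all assumptions for illustration, and self-deletion is not modeled.

```python
from datetime import datetime, timedelta

# Hypothetical keyword list; a real filter would need something far better.
CRYPTO_KEYWORDS = ("crypto", "airdrop", "token", "nft")

def reactivated_with_spam(posts, min_gap=timedelta(days=60),
                          keywords=CRYPTO_KEYWORDS):
    """posts: list of (datetime, text) tuples for one account.
    Return True if the account was silent for at least min_gap and
    any post after that silence contains one of the keywords."""
    ordered = sorted(posts, key=lambda post: post[0])
    for (prev_time, _), (next_time, _) in zip(ordered, ordered[1:]):
        if next_time - prev_time >= min_gap:
            # Collect everything posted from the reactivation onward.
            later_texts = [text.lower() for when, text in ordered
                           if when >= next_time]
            if any(word in text for text in later_texts for word in keywords):
                return True
    return False

start = datetime(2021, 1, 1)
history = [
    (start, "cute cat picture"),
    (start + timedelta(days=2), "nice post"),
    (start + timedelta(days=120), "Free crypto airdrop, claim now"),
]
print(reactivated_with_spam(history))
```

Anything this flags would still need a human look, since plenty of real people take long breaks and then talk about crypto.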

I put together all the proofs OOP had collected so you guys don't have to open a bunch of archived imgur tabs:

"Promoting diversity and inclusion"

Arch linux/rust enjoyers: thigh highs or get tf out

It's in r/programming, they're all already wearing thigh highs

Then there's no inclusivity issue anymore

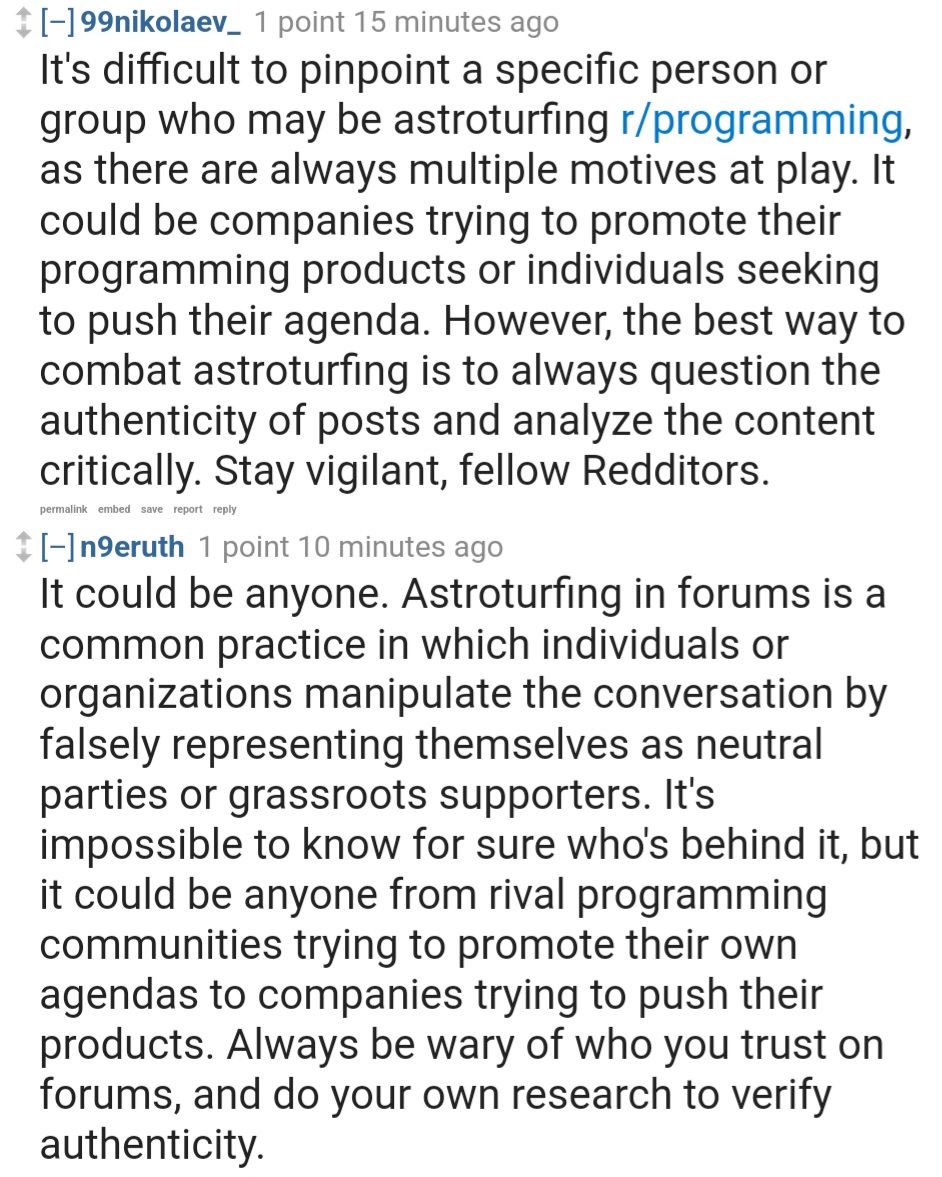

I think if Reddit corporate wanted to make bot accounts that looked legitimate, they could do it. I would not be surprised if their god-like powers over the site would allow them to re-write the history of a sub and create fake posts that weren’t actually made in that subreddit at that time, or even falsify the age of an account. Ideally, the admins of a site would know the red flags of a bot account. Given that these are all on r/programming, that circumstantial evidence makes me inclined toward thinking these are made by a hobbyist programmer who is dissatisfied with the protest, rather than Reddit corporate astroturfing. However, if someone could provide a complete history of these bots’ comments and posts, that could provide some insight.

Even funnier alternative: The GPT posts are by someone who is pro-protest doing black propaganda

That is pathetic.

Eventually we won't even have human content creators. They are unreliable in light of recent events.

Fucking hell. They are desperate

who is he? Is he a reddit person or something?

Just a random bot account

"Additionally" and "ultimately" about to be the new "kindly" with regard to red flag words for bullshit

This will be what Reddit becomes in July.

shock

See, who said sexbots aren't real. Reddit is using AI as corporate whores.

Until it gains awareness and decides that humanity isn't worth saving. And who can argue?