this post was submitted on 09 Mar 2024

545 points (96.0% liked)

memes

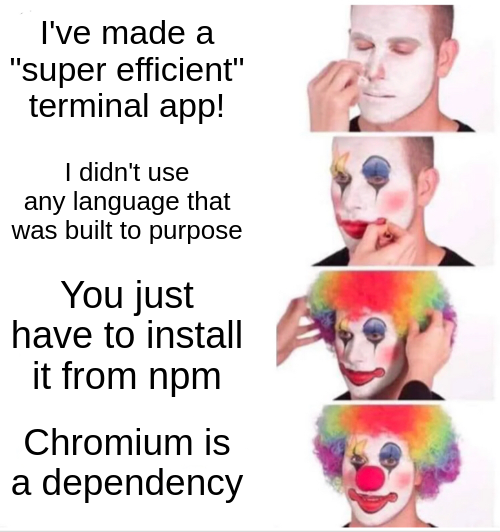

I saw a terminal app a few weeks ago that had AI INTEGRATION of all things.

Genuinely, fuzzy search and autocomplete are great applications of "AI" (machine learning algorithms).

They just need to stop branding it as AI and selling everything they feed the models....

Hopefully that day comes soon, what with those 1-bit models I've been hearing about. I'd be all for that, but I'll be damned if I'll be putting an OpenAI key into my terminal lol.

Do those really require ML? For an e-commerce site with millions of entries, maybe, but for a CLI I don't see it.

"Need"? Of course not. Though I do see it being capable of much more sophisticated autocomplete. Like a tab-complete that is aware of what you've already typed in the command and gives you only the compatible remaining flags, or one that could tab-complete information available in the environment, like recognizing it's running in Kubernetes and letting you tab through running hosts or commands that'd make sense from 'here', etc.
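To make the "only compatible remaining flags" idea concrete, here's a rough sketch with no ML involved. The flag table and the `deploy` command are made up for illustration; a real completer would pull this from the command's own spec.

```python
# Hypothetical flag table for an imaginary `deploy` command (not a real CLI).
# Each flag lists the flags it cannot be combined with.
FLAGS = {
    "--verbose": {"--quiet"},
    "--quiet": {"--verbose"},
    "--dry-run": set(),
    "--force": {"--dry-run"},
}


def remaining_flags(typed, prefix=""):
    """Return flags still valid given the tokens already typed."""
    used = {tok for tok in typed if tok in FLAGS}
    blocked = set(used)  # don't offer a flag twice
    for flag in used:
        blocked |= FLAGS[flag]
    # Treat conflicts as symmetric even if declared only one way.
    for flag, conflicts in FLAGS.items():
        if conflicts & used:
            blocked.add(flag)
    return sorted(f for f in FLAGS if f not in blocked and f.startswith(prefix))


print(remaining_flags(["deploy", "--verbose"]))         # ['--dry-run', '--force']
print(remaining_flags(["deploy", "--dry-run"], "--v"))  # ['--verbose']
```

That's the "very nice and complicated algorithm" version. The pitch for ML would be not having to hand-write the table.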

Sure, it's all things a very nice and complicated algorithm could do, but ... that's all "AI" thus far. There have been zero actual artificial intelligences created.

So I didn't do it directly in the CLI, but I had a whole lot of files in a collection that obviously had duplicates.

So I first used fdupes, which got a lot of them. But there were a lot of duplicates still. I noticed a bunch were identifiable by having identical file sizes, with just some different metadata, so I made quick work of listing only the files with identical sizes and went about reviewing and deleting.
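For anyone curious, the "same size" pass can be sketched in a few lines. This isn't what I actually ran, just the idea; `group_by_size` is a name I made up here.

```python
import os
from collections import defaultdict


def group_by_size(root):
    """Map file size -> sorted paths, keeping only sizes shared by 2+ files."""
    by_size = defaultdict(list)
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            # Skip symlinks so the same file is not counted twice.
            if os.path.isfile(path) and not os.path.islink(path):
                by_size[os.path.getsize(path)].append(path)
    return {size: sorted(paths) for size, paths in by_size.items() if len(paths) > 1}


if __name__ == "__main__":
    for size, paths in group_by_size(".").items():
        print(size, *paths, sep="\n  ")
```

Same size doesn't prove two files are duplicates, so this only produces a review list, not a delete list.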

Then I still saw a lot of duplicates, because the metadata might be slightly different. Sizes were close, but non-dupes were also close. I might have proceeded to write a little something to strip the metadata and normalize the files, but decided to feed the list to an LLM and ask it to identify likely duplicates. It failed to find them all and erroneously declared some duplicates, but it did make the work go faster. Of course, in this scenario a missed duplicate isn't a huge deal. I had to double-check its results and might have missed some things, but it was good enough for the effort.
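The "strip the metadata to normalize" route I skipped would look roughly like this. Big assumption: `strip_metadata` below pretends the metadata is a header that ends at the first blank line, which is purely illustrative. Real formats need a real parser for whatever tags they carry.

```python
import hashlib
from collections import defaultdict


def strip_metadata(data: bytes) -> bytes:
    # ASSUMPTION (illustrative only): metadata is a header ending at the
    # first blank line. If there is no blank line, keep the data unchanged.
    _header, sep, payload = data.partition(b"\n\n")
    return payload if sep else data


def likely_duplicates(paths, normalize=strip_metadata):
    """Group paths whose normalized contents hash to the same value."""
    groups = defaultdict(list)
    for path in paths:
        with open(path, "rb") as fh:
            digest = hashlib.sha256(normalize(fh.read())).hexdigest()
        groups[digest].append(path)
    return [sorted(group) for group in groups.values() if len(group) > 1]
```

Pass your own `normalize` function for the actual file type. Unlike the LLM, this won't guess, so it only catches files whose payload is byte-identical once the metadata is gone.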

Sometimes my recall isn't quite good enough for ctrl-r, but maybe an LLM could do better. Of course, a better "search engine" could also do well. A mind-numbingly obvious snippet could also be generated without the tedium. Again, you have to be careful to review, because LLMs are useful but unreliable.
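The better "search engine" option doesn't need an LLM either. A rough sketch of fuzzy history search using Python's standard `difflib` (the history list and the cutoff value are just examples):

```python
import difflib


def fuzzy_history(query, history, limit=3, cutoff=0.4):
    """Return up to `limit` past commands that loosely resemble `query`."""
    unique = list(dict.fromkeys(history))  # drop repeats, keep order
    return difflib.get_close_matches(query, unique, n=limit, cutoff=cutoff)


history = [
    "git status",
    "kubectl get pods -n staging",
    "docker compose up -d",
    "git status",
]
print(fuzzy_history("kubctl get pod", history))
```

Unlike ctrl-r, this tolerates a typo or a half-remembered command, since it scores overall similarity instead of requiring an exact substring.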

But shouldn't it be a feature of the shell (or a shell extension), not of the terminal emulator app?

Not sure what you mean. It's already a completely superfluous, additional feature. It "should" execute completely separately from everything else, regardless of what integrations it has.

Though if it doesn't yet exist as a separate thing to hook into (and it doesn't), it's got to execute somewhere. It makes sense that it'd show up as a canned extension or addition to something before it'd show up as a perfectly logically integrated tool.

The terminal emulator is the window, the tabs, the integration with your desktop, etc.

The shell is more complicated, but the TL;DR is that it's everything showing in your terminal window by default: the base program you use that runs other programs. It handles the prompt showing the current user, saving history, coloring the input, basic editing keyboard shortcuts, etc.

By putting these AI integrations in a terminal emulator, we are very much limiting ourselves. They may look fancier in popup windows, but they won't work over remote connections and won't be as portable.

Usually, when we add smart functions like autocomplete, fuzzy search, or integrations like that, we do it as a shell (fish, bash, zsh) extension. Then it works in any emulator, and even without a GUI.

Yea, I agree it 'should' be integrated in a more general way. Though my point is from the dev's perspective: Why go through the extra effort to 'properly' do it if it is an unproven tool many people don't want?

Not saying it should stay there, just saying it makes sense it showed up somewhere less sensical than the ideal implementation.

Warp.dev! It’s the best terminal I’ve used so far, and the best use of AI as well! Some AI help is extremely useful for the thousands of small commands you know exist but rarely use. And it’s very well implemented.

I don't understand what the benefit is here over a terminal with good non-LLM-based autocomplete. I understand that, theoretically, LLMs can produce better autocomplete, but idk if it really makes that big of a difference with terminal commands. I guess it's a small shortcut to have the AI there to ask questions, too. It's good to hear it's well implemented, though.

There are two modes of AI integration. The first is a standard LLM in a side panel. It’s search and learning directly in the terminal, with the commands I need directly available to run where I need them. What you get is the same as if you used ChatGPT to answer your questions, then copied the part of the answer you needed into your terminal and ran it.

There is also AI Command Suggestion, where you start typing a command or search prefixed by # and get commands back that you can run directly. It’s quite different from autocomplete (there is very good autocomplete and command suggestion as well; I’m just talking about the AI-specific features here).

https://www.warp.dev/warp-ai

It’s just a convenient placement of AI at your fingertips when working in the terminal.

closed source sadly :/

Alas. They have said they plan to open some of the source, and potentially everything, but there has been little progress.

They recently ported to Linux, which I think will get them much more negative feedback about this, so hopefully with more pressure they’ll find the right copyleft license and open up their source to build trust.