This is hilarious, but also: how could anyone develop such a tool and not at least test it out on their own images? Someone with a public persona no less! Boggles my mind.


I mean, I bet they did, but you can't test it with all the photos ever taken of you. Someone probably tried dozens of photos to get this result. Which, to be clear, I admire.

Well - in this case it's the photo she posted herself, to announce the app.

Wow

They did, they know it doesn't work, but they are already too far down in the money hole. Gotta grift and bullshit and spread bigotry until they make back the money.

Edit: Some words

The false result is from some unrelated gender detection tool that has nothing to do with the app. Nothing here indicates the actual app makes the same error.

The post is basically saying "this thing doesn't work because I tried something else and it didn't work".

Heat emissions.

From a picture.

Hold on there's a ringing in my ears... Yeah yeah that's my bullshit alarm going off.

The heat emission is the smoke they're trying to blow up my ass.

Could be analysing 4 band imagery with an NIR layer. But then that usually comes from satellite imagery so it would make identifying gender challenging. I'd struggle with just a grainy image of the top of someone's head, even if I knew how warm it was.

Well, Big Shaq told us all that Man's not hot.

So because she didn’t check herself, you might say she wrecked herself.

Maybe her transphobia is just an attempt to pass better?

Ah straight out of the Dictatorship Survivor's Guide for Middle Management playbook.

well if it is 99.85% accurate, maybe she is self hating and hiding

Jenny: It's 99.85% accurate!

App: 97.61% certain Jenny is a dude.

priceless

What’s the play here? Does she not know that people upload highly inaccurate or blatantly fake photos to dating sites all the time?

What problem does this solve?

The problem that right wing fuckwits always need somebody to hate and discriminate against.

The problem it solves is that she needs plenty of money with little effort and morals are not a limiting factor. And what Diplomjodler3 said.

OK, I'm starting to have doubts that this is legit. Looks like OP (or OOP, idk) just found a classifier that misclassified that image. Nothing I'm seeing indicates that it's the classifier used for her stupid app.

I fed it a pre-HRT pic and got "Woman, 56% confident". Lol. I guess it's kind of affirming to think a machine could see the real me back then?

I did the same pic that was used above and it said "Woman, 95% confident"

that's a solid disclaimer.

Personally I think a lot of TERFs became that way because they get misgendered a lot (by other humans mostly) especially when they dress butch. (She's not dressing butch here, and ftr I would have guessed female.)

They have decided it's transwomen who are at fault because their existence opens people's minds to the idea that a person's chromosomes might not match their clothing. People are just trying to address them as they're presenting.

The real problem is that there's still societal stigma attached to being misgendered. And still more societal stigma attached to being trans. Which they're internalizing, and then making worse, instead of working to recast it as a simple error in judgement by the person using the wrong pronoun.

The ones who are really at fault are those who for generations have purposely misgendered other people as a slur meant to wound. Who've cast femininity as weak and masculinity as ugly. Especially now when they should know better. They make it worse for those of us who might make an honest mistake or even just want a polite way to ask. If every first conversation started with telling each other one's pronouns, and every reference to an unintroduced person used they/them, you'd be able to ask without seeming to cast doubt on who they are.

And the other problem is the imbalances of power and privilege attached to gender in general of course. It would hit a lot different if a person's gender wasn't treated like a big part of who they are and where they stand in humanity.

Tldr: TERFs aren't just mad about being misgendered as men, (wrong but at least higher status) they're mostly mad about being misgendered as trans (wrong and lower status).

Stick, 🚲, "Trans did this!"

Interestingly, my first conversation with a teenager (I wasn't related to) in about 15 years began with asking about pronouns. It's very painless and easy to normalize as long as you don't have a reactionary person nearby.

Also, the kids are alright.

I assumed most terfs were cis het gender conforming people. Is there data showing that one way or another?

Cis yes, and I'm not sure about the rest. But saying they get mistaken for trans wasn't meant to imply they're mostly lesbian either. (Although I did stumble into a TERFy lesbian discussion on Reddit once back in the day and that's probably where I heard the part about more when looking butch.)

As a cis het nonterfy feminist old woman who sometimes wears dresses but never makeup, and a buzzcut, I don't care when someone mistakes my gender or sexuality. But that's probably because I yeeted a lot of my fucks along with my uterus many years ago.

Most are, yeah. IIRC, cis lesbians are the demographic most likely out of anyone to support trans rights outside of trans people themselves, by a large margin.

> Tldr: TERFs aren’t just mad about being misgendered as men, (wrong but at least higher status) they’re mostly mad about being misgendered as trans (wrong and lower status).

Eh, TERFs in particular really lean on three things: "men bad/dangerous/evil/predators", "women good, but need protected from predation" and "you can't change which one you are, if you think you can it's either mental illness or a ploy."

It's why they focus so heavily on trans women (and generally ignore trans men), especially trans women in spaces that are traditionally women-only. It's why their language around the topic is so heavily drenched in the notion that trans women are "men in dresses" who are dressing up like that to get access to women's spaces - the whole presumption is that trans women are sexual predators in costume to get access to prey in more vulnerable situations.

According to the screenshot, it doesn't even call her a trans woman, it calls her a man. Presumably because man and woman are the only options in her little TERF world.

The AI probably saw that massive boner in her pants and got confused.

As funny as it is, I don’t think people should be uploading their images to this app. Maybe it’s hilariously wrong because it’s trying to data mine?

Yup if you give information to a company it's now theirs. The old adage about being the product if you're not paying no longer applies. Now you are the product even if you're paying.

They can always tell!

I wonder if the AI is detecting that the photo is taken from further away and below eye level which is more likely for a photo of a man, rather than looking at her facial characteristics?

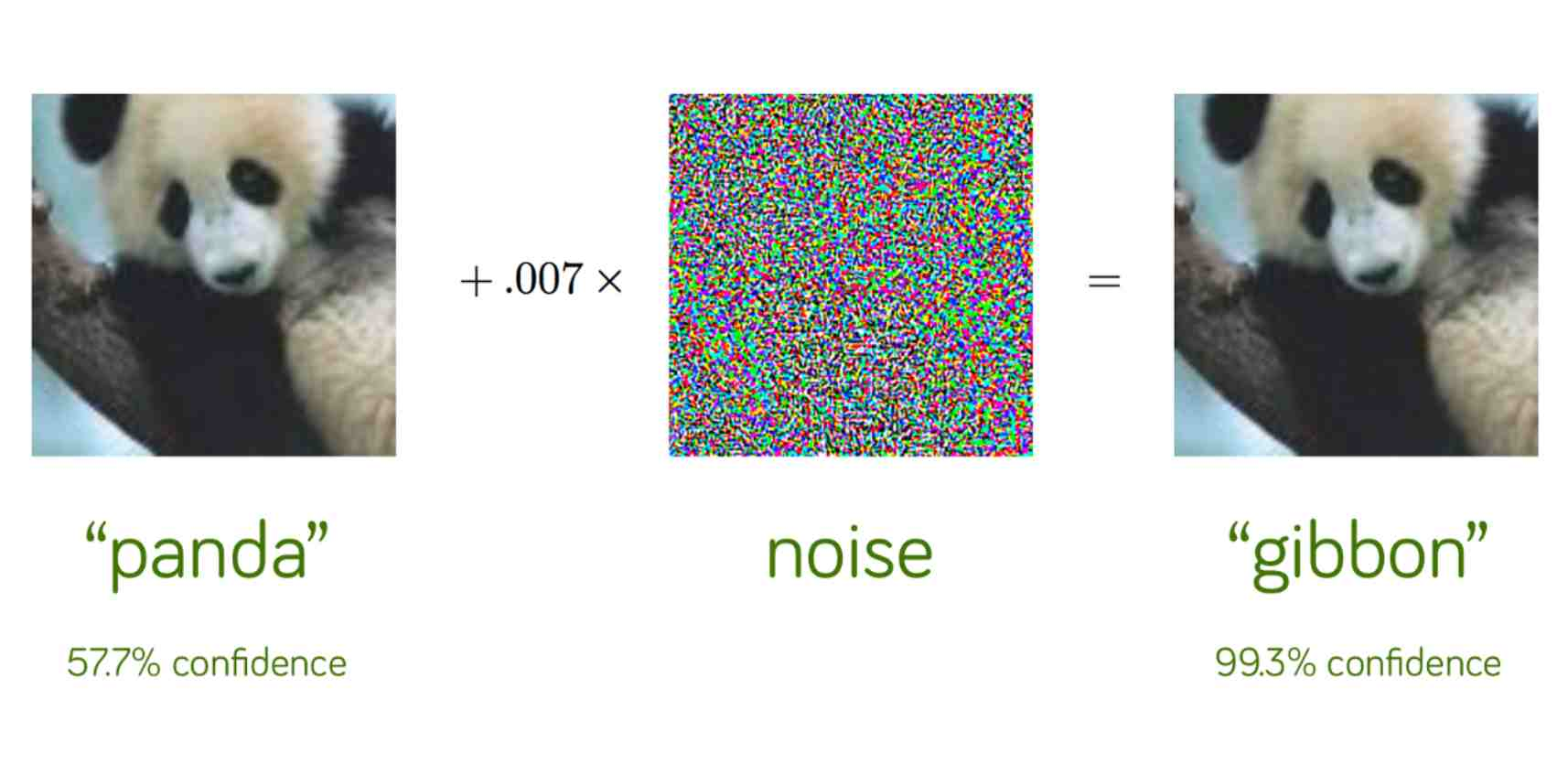

It's possible to manipulate an image in a way that the original and the new one are indistinguishable to the human eye, but the AI model gives completely different results.

Like this helpful graphic I found
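For anyone wondering how a change too small to see can flip a model's answer, here's a minimal sketch. It assumes a made-up linear classifier with random weights, not the tool from the screenshot, and every name in it (`predict`, `perturb`, `epsilon`) is hypothetical. Each pixel moves by at most `epsilon`, in the direction its weight says will raise the score. That's the same idea as the sign-of-the-gradient attacks used on real image models, just simplified.

```python
import math
import random

def predict(weights, bias, pixels):
    """Toy logistic classifier: probability of class 1 for a flat pixel list."""
    score = bias + sum(w * p for w, p in zip(weights, pixels))
    return 1.0 / (1.0 + math.exp(-score))

def sign(x):
    """Return -1, 0 or 1 depending on the sign of x."""
    return (x > 0) - (x < 0)

def perturb(weights, pixels, epsilon):
    """Nudge each pixel by at most epsilon in the direction that raises the score.

    For a linear model the gradient of the score with respect to the pixels is
    the weight vector itself, so the sign of each weight gives the direction.
    """
    return [min(1.0, max(0.0, p + epsilon * sign(w)))
            for w, p in zip(weights, pixels)]

# Assumption: random weights stand in for a trained model.
rng = random.Random(0)
n_pixels = 10_000  # e.g. a 100x100 grayscale image, values in [0, 1]
weights = [rng.uniform(-0.05, 0.05) for _ in range(n_pixels)]
pixels = [rng.uniform(0.2, 0.8) for _ in range(n_pixels)]
# Pick the bias so the untouched image scores -3 (about 5% for class 1).
bias = -3.0 - sum(w * p for w, p in zip(weights, pixels))

epsilon = 0.03  # roughly 8/255 per pixel
tweaked = perturb(weights, pixels, epsilon)

p_before = predict(weights, bias, pixels)
p_after = predict(weights, bias, tweaked)
largest_change = max(abs(a - b) for a, b in zip(pixels, tweaked))
print(f"before: {p_before:.3f}  after: {p_after:.3f}  "
      f"max pixel change: {largest_change:.3f}")
```

No pixel moves by more than 0.03 on a 0 to 1 scale, but with ten thousand pixels all pushed the same way the tiny nudges add up and the toy model changes its mind.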

Or... edit the HTML...

You think someone would do that? Just go on the internet and lie?

Yeah, this is a valid point. Whether this is the exact case or not I don't know, but a lot of people don't realize how many weird biases can appear in the training data.

Like that AI trained to detect if a mole was cancer or not. A lot of the training images that showed cancer had rulers in them. So the AI learned rulers are cancerous.

I could easily see something stupid like the angle the picture was taken from being something the AI erroneously assumed to be useful for determining biological sex in this case.
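Here's a rough sketch of that ruler effect on synthetic data. Everything in it is an assumption for illustration: the feature names, the 75% and 95% rates, and the one-feature "model" (`fit_stump`) are all made up and have nothing to do with the real study or the app. The point is just that a learner rewarded only for training accuracy will grab whatever shortcut the dataset offers.

```python
import random

def make_data(n, ruler_follows_label, rng):
    """Generate (features, label) rows for a made-up mole dataset."""
    rows = []
    for _ in range(n):
        cancer = rng.random() < 0.5
        # Genuine but noisy signal: matches the label 75% of the time.
        irregular = cancer if rng.random() < 0.75 else not cancer
        if ruler_follows_label:
            # Dataset artifact: cancer photos almost always include a ruler.
            ruler = cancer if rng.random() < 0.95 else not cancer
        else:
            # In the wild, a ruler says nothing about the diagnosis.
            ruler = rng.random() < 0.5
        rows.append(({"irregular": irregular, "ruler": ruler}, cancer))
    return rows

def accuracy(rows, feature):
    """Accuracy of the rule 'predict cancer exactly when this feature is true'."""
    return sum(x[feature] == y for x, y in rows) / len(rows)

def fit_stump(rows):
    """Pick the single feature with the best training accuracy."""
    return max(("irregular", "ruler"), key=lambda f: accuracy(rows, f))

rng = random.Random(42)
train = make_data(2000, True, rng)    # rulers track the label
clinic = make_data(2000, False, rng)  # rulers are unrelated to the label

chosen = fit_stump(train)
train_acc = accuracy(train, chosen)
clinic_acc = accuracy(clinic, chosen)
print(f"chosen feature: {chosen}")
print(f"training accuracy: {train_acc:.2f}, accuracy without the shortcut: {clinic_acc:.2f}")
```

The stump looks great on the data it was trained on and falls to coin-flip territory once the ruler stops tracking the label, even though the genuinely useful feature was sitting there the whole time.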

Lol it was 85% confident I was "female"

We are all Trans Women on this blessed day.

Half of my images are around 50% confidence that I look like I'm a man

All other images lead to 98% confidence in me being a woman.

All of my test pics were taken pre-HRT, and while my style makes it obvious that I don't identify as masculine (or am GNC+Gay), my face (unfortunately) screams pre-HRT AMAB.

That AI is totally shit

They said it includes heat emissions. Which is impossible without an IR camera that everyone totally has. I love it when bullshit tech charlatans tell on themselves.

Who uses their real picture in a dating app anyways?

/s

Ah bless, they've automated it now.