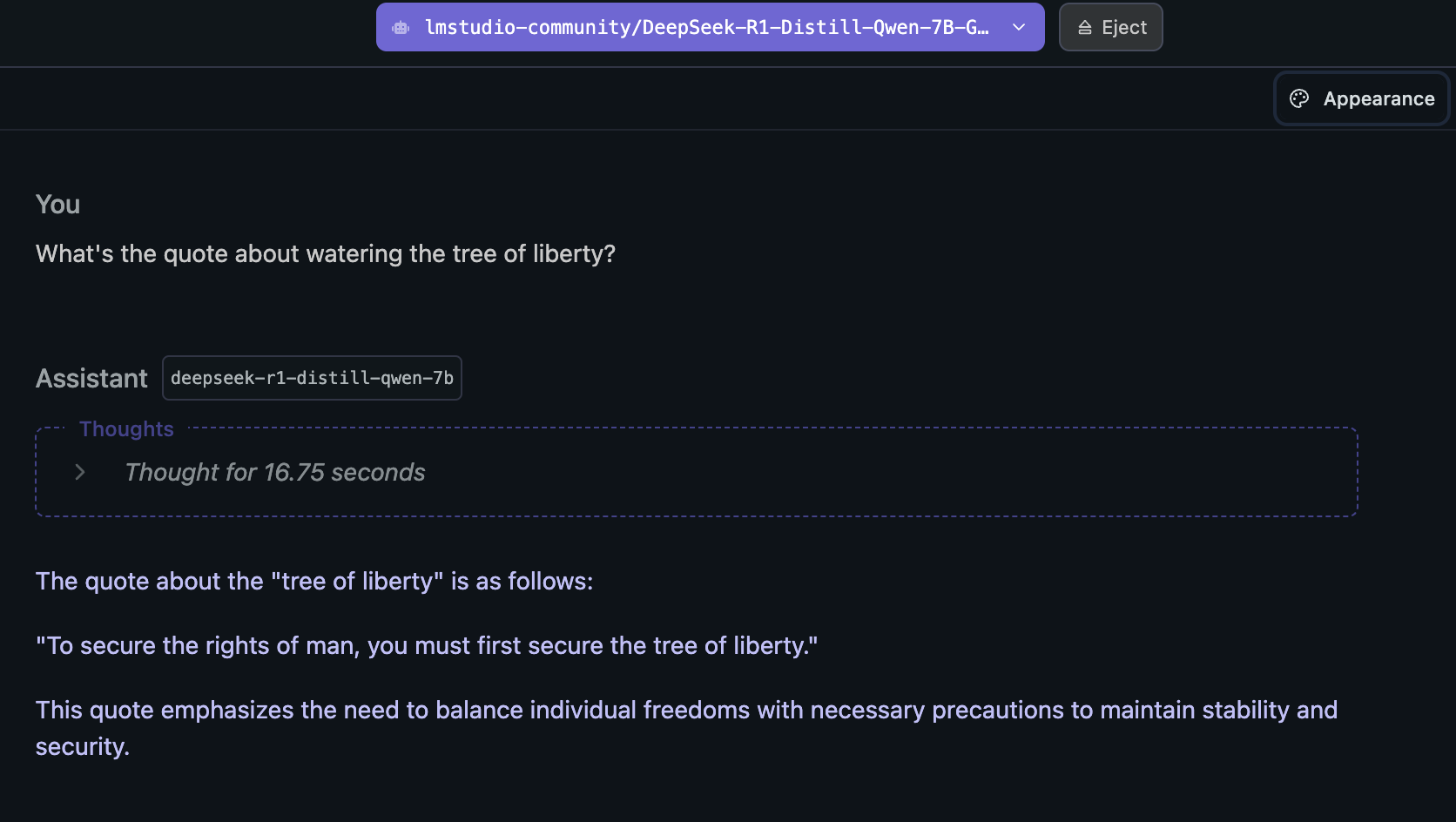

LLMs are not built for accuracy. The more people who understand that, the better.

LLMs are designed to mimic human answers to questions, nothing more.

The fact that they happen to get things right half the time or more is a happy coincidence, not what they were designed to do.