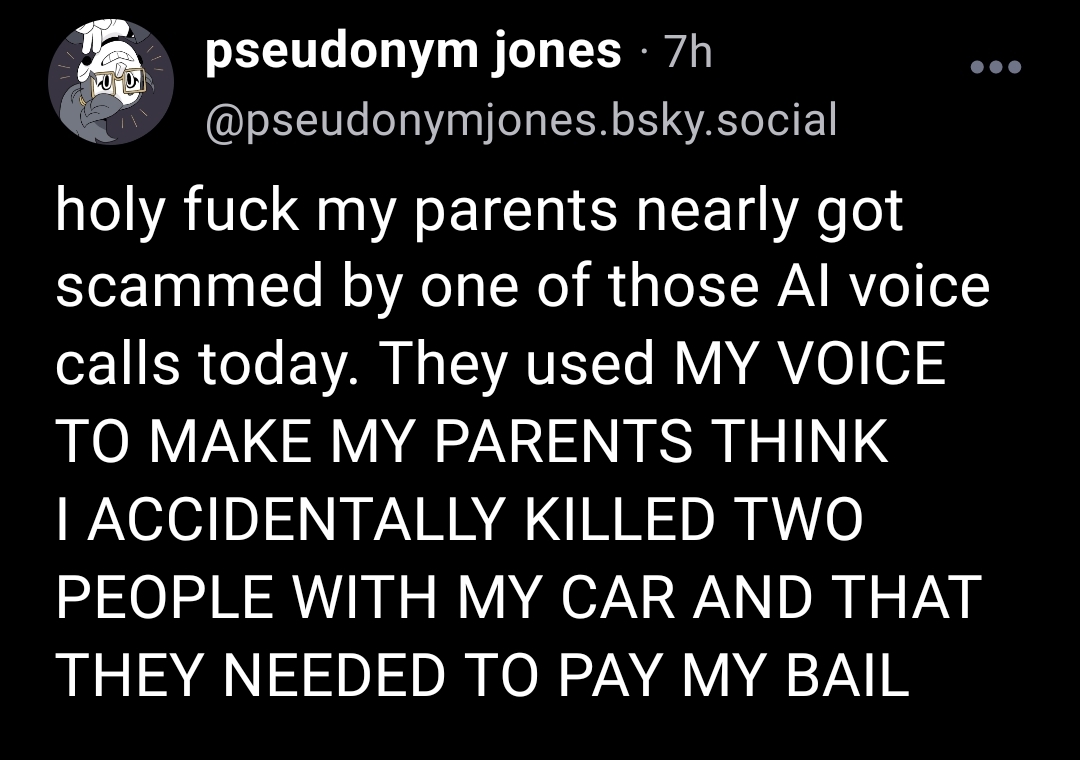

Are we sure it's AI?

I heard of this scam hitting my extended family maybe a decade ago. The voice was a real person overseas with a lot of experience tricking grandparents. The scammers had only basic information.

They act as a freaked-out kid and the victim gets roped in. They scam for thousands of dollars each time, so even succeeding a few times a day would net a big profit. Also, cell connections are low fidelity; I bet that helps them trick the victim.