The network transparency thing is no longer a limitation with Wayland btw, thanks to PipeWire and Waypipe.

That wasn't the point I was trying to make though. :)

Chrome(ium) still doesn't run natively under Wayland by default - you'll need to manually pass specific flags to the executable to tell it to use Wayland. See: https://wiki.archlinux.org/title/chromium#Native_Wayland_support
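
To give a rough idea of what "passing flags" means in practice, here's a small Python launcher sketch. `--ozone-platform=wayland` is a real Chromium flag, but the `chromium` binary name and the `WAYLAND_DISPLAY` check are assumptions about your setup, and the wiki page above is the better authority on which flags are currently recommended.

```python
import os


def chromium_command(binary="chromium", env=None):
    """Build a Chromium command line, adding the Wayland flag in a Wayland session."""
    env = os.environ if env is None else env
    cmd = [binary]  # assumption: the binary is called "chromium" on your distro
    # WAYLAND_DISPLAY is normally set by the compositor in a Wayland session.
    if env.get("WAYLAND_DISPLAY"):
        cmd.append("--ozone-platform=wayland")
    return cmd


# To actually launch the browser: subprocess.Popen(chromium_command())
print(chromium_command(env={"WAYLAND_DISPLAY": "wayland-0"}))
```

Without the flag (or an equivalent setting), the browser would fall back to running under XWayland.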

Firefox also needed manual flags, but not anymore - Wayland support has been enabled by default since version 121, released around three months ago. Some distros had enabled Wayland for Firefox well before that though, Fedora being one of them.

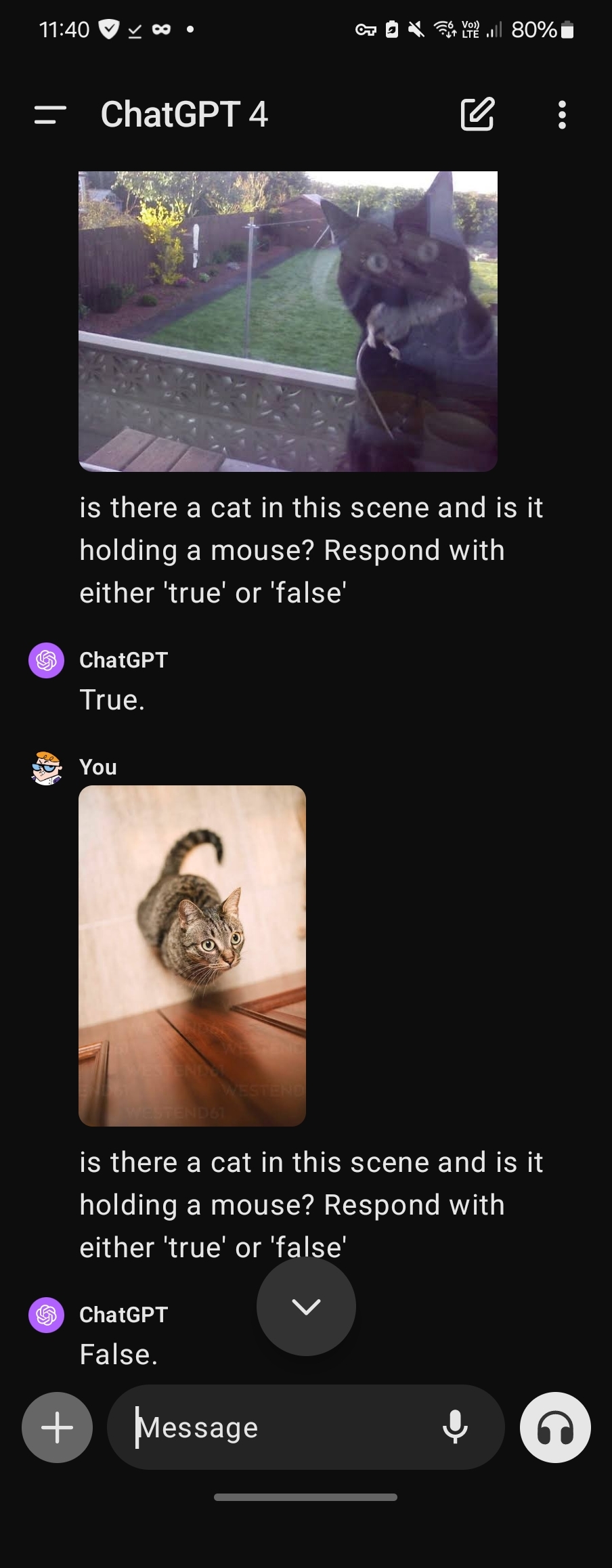

An easy "cheat" way to do it would be to use ChatGPT 4: you can submit a picture and ask it a question like "is there a cat in this scene and is it holding a mouse? Respond with either 'true' or 'false'".

Python examples here: https://platform.openai.com/docs/guides/vision
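
A minimal sketch of what that request could look like, assuming the Chat Completions image-input message format. It only builds the payload and parses the reply, it doesn't make any network call. The model name `gpt-4o`, the JPEG data URL and the helper names are my own assumptions, so check the guide above for the current details.

```python
import base64


def build_vision_request(image_bytes, question, model="gpt-4o"):
    """Build a Chat Completions payload carrying one image and one question."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,  # assumption: swap in whichever vision-capable model you use
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {
                        "type": "image_url",
                        # assumption: the image is a JPEG, sent inline as a data URL
                        "image_url": {"url": f"data:image/jpeg;base64,{encoded}"},
                    },
                ],
            }
        ],
        "max_tokens": 5,
    }


def parse_bool_reply(reply_text):
    """Turn a 'true'/'false' reply into a bool, or None for anything else."""
    cleaned = reply_text.strip().strip("'\".").lower()
    return {"true": True, "false": False}.get(cleaned)
```

With the official Python client you'd pass this along as `client.chat.completions.create(**payload)` and feed the returned message text to `parse_bool_reply`. The `None` case is worth handling, since the model won't always stick to the one-word answer.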

Thanks, this makes sense. Would also help keep longer conversations going (one of the issues with the daily threads being that you were less inclined to go back to an older thread to check for new comments).

In addition to the other replies, one of the main draws of Wayland is that it's much less susceptible to screen-tearing / jerky movements that you might sometimes experience on X11 - like when you're dragging around windows or doing something graphics/video heavy. Wayland just feels much smoother and more responsive overall. Other draws include support for modern monitor/GPU features like variable refresh rates, HDR, mixed DPI scaling and so on. And there's plenty of stuff still in the works along those lines.

Security is another major draw. Under X11, any program can directly record what's on your screen, capture your clipboard contents, and monitor or simulate keyboard input - without your permission or knowledge. That's considered a huge security risk in the modern climate. Wayland desktops on the other hand rely on something called "portals" (XDG Desktop Portal), which act as a middleman and allow the user to explicitly permit applications access to these things. This has also been a sore point for many users and developers, because the old way of doing these things no longer works, and this broke a lot of apps and workflows. But many apps have since been updated, and many newer apps have been written to work in this new environment. So there are still some growing pains in this area.

In terms of major incompatibilities with Wayland - XFCE is still a work-in-progress but nearly there (should be ready maybe later this year), but some older DE/WMs may never get updated for Wayland (such as OpenBox and Fluxbox). Gnome and KDE work just fine though under Wayland. nVidia's proprietary drivers are still glitchy/incomplete under Wayland (but AMD and Intel work fine). Wine/Proton's Wayland support is a work-in-progress, but works fine under XWayland.

Speaking of which, "XWayland" is kinda like a compatibility layer which can run older applications written for X11. Basically it's an X11 server that runs inside Wayland, so you can still run your older apps. But there are still certain limitations, like if you've got a keyboard macro tool running under XWayland, it'll only work for other X11 apps and not the rest of your Wayland desktop. So ideally you'd want to use an app which has native Wayland support. And for some apps, you may need to pass on special flags to enable Wayland support (eg: Chrome/Chromium based browsers), otherwise it'll run under XWayland. So before you make the switch to Wayland, you'll need to be aware of these potential issues/limitations.

Thanks! Yep I mentioned you directly seeing as all the other mods here are inactive. I'm on c/linux practically every day, so happy to manage the weekly stickies and help out with the moderation. :)

Incorrect. "Open Source" also means that you are free to modify and redistribute the software.

Not necessarily true - that right to modify/redistribute depends on the exact license being applied. For example, the Open Watcom Public License claims to be an "open source" license, but it doesn't actually let you modify the software freely: you're required to publish your source whenever you "deploy" a modified version, even for private use, which is why the FSF considers it non-free. This is also why we specifically have the terms "free software" or "FOSS", which imply that you are indeed allowed to modify and redistribute.

I would recommend reading this: https://www.gnu.org/philosophy/open-source-misses-the-point.en.html

As an Arch user (btw), that's rarely an issue thanks to the AUR and its vast package pool :) But on the very rare occasion that it's not there on the AUR but available as a deb, I can use a tool called Debtap to convert the .deb to Arch's .pkg.tar.zst package format.

For rpm-based distros like Fedora and OpenSUSE etc, there's a similar tool called alien that can convert the .deb to .rpm.

In both instances though, dependencies can be a pain - sometimes you may need to trawl thru the dependencies and convert/install them first, before doing the main package.

Ideally though, you'd just compile from source. It used to be a daunting task in the old days, but with modern CPUs and build systems like meson, it's not really a big deal these days. You'd just follow the build instructions on the package's git repo, and usually it's just a simple copy-paste job.

Finally, if these packages are just regular apps (and not system-level packages like themes etc), then there are multiple options these days such as using containers like Distrobox, or installing a Flatpak/AppImage version of that app, if available.

I'm not super familiar with ZFS so I can't elaborate much on those bits, but hardlinks are just pointers to the same inode number (which is a filesystem's internal identifier for every file). The concept of a hardlink is a file-level concept basically. Commands like lsblk, df etc work on a filesystem level - they don't know or care about the individual files/links etc, instead, they work based off the metadata reported directly by the filesystem. So hardlinks or not, it makes no difference to them.
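
The "pointers to the same inode" bit is easy to see for yourself. Here's a small sketch using Python's `os` module in a throwaway temp directory (the file names are made up, and it assumes a Linux filesystem that supports hardlinks):

```python
import os
import tempfile

with tempfile.TemporaryDirectory() as tmp:
    original = os.path.join(tmp, "original.txt")
    link = os.path.join(tmp, "hardlink.txt")

    with open(original, "w") as f:
        f.write("hello")

    os.link(original, link)  # create a second name for the same inode

    st_original = os.stat(original)
    st_link = os.stat(link)

    # Both names resolve to the same inode, and the inode knows it has two names.
    same_inode = st_original.st_ino == st_link.st_ino
    link_count = st_original.st_nlink

    print("same inode:", same_inode)
    print("link count:", link_count)
```

Neither name is the "real" one - they're both just directory entries for the same inode, which is why filesystem-level tools don't distinguish between them.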

Now this is contrary to how tools like du, ncdu etc work - they work by traversing thru the directories and adding up the actual sizes of the files. du in particular is clever about it - if it comes across multiple hardlinks to the same file during a single run, then it's smart enough to count that file only once. Other file-level programs may or may not take this into account, so you'll have to verify their behavior.
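
Here's a rough sketch of that "count each file only once" idea - this is not du's actual implementation, just the same trick of remembering which (device, inode) pairs have already been seen. To keep it simple it sums apparent file sizes rather than disk blocks, and `tree_size` is a made-up helper name.

```python
import os
import tempfile


def tree_size(root, dedupe_hardlinks=True):
    """Sum apparent file sizes under root, optionally counting each inode only once."""
    seen = set()
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            st = os.lstat(os.path.join(dirpath, name))
            key = (st.st_dev, st.st_ino)  # identifies the underlying file
            if dedupe_hardlinks and key in seen:
                continue  # another name for a file we've already counted
            seen.add(key)
            total += st.st_size
    return total


with tempfile.TemporaryDirectory() as tmp:
    data = os.path.join(tmp, "data.bin")
    with open(data, "wb") as f:
        f.write(b"x" * 1000)
    os.link(data, os.path.join(tmp, "data-link.bin"))

    deduped = tree_size(tmp)
    naive = tree_size(tmp, dedupe_hardlinks=False)
    print(deduped, naive)
```

A tool that skips the dedupe step would report the hardlinked file twice, which is the kind of discrepancy to look out for when comparing numbers from different programs.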

As for move operations, it depends largely on whether the move is within the same filesystem or across filesystems, and the tools or commands used to perform the move.

When a file or directory is moved within the same filesystem, it generally doesn't affect hardlinks in a significant way. The inode remains the same, as do the data blocks on the disk. Only the directory entries pointing to the inode are updated. This means if you move a file that has hardlinks pointing to it within the same filesystem, all the links still point to the same inode, and hence, to the same content. The move operation does not affect the integrity or the accessibility of the hardlinks.
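
A quick way to convince yourself of this, sketched in Python with made-up file names inside a single temp directory (so the move is guaranteed to stay on one filesystem):

```python
import os
import tempfile

with tempfile.TemporaryDirectory() as tmp:
    src = os.path.join(tmp, "file.txt")
    link = os.path.join(tmp, "link.txt")
    subdir = os.path.join(tmp, "sub")
    os.mkdir(subdir)
    dst = os.path.join(subdir, "moved.txt")

    with open(src, "w") as f:
        f.write("payload")
    os.link(src, link)

    inode_before = os.stat(src).st_ino
    os.rename(src, dst)  # same filesystem: only the directory entry changes

    inode_preserved = os.stat(dst).st_ino == inode_before
    still_linked = os.path.samefile(dst, link)

    print("inode preserved:", inode_preserved)
    print("still the same file as the hardlink:", still_linked)
```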

Moving files or directories across different filesystems (including external storage) behaves differently, because each filesystem has its own set of inodes.

- The move operation in this scenario is effectively a copy followed by a delete. The file is copied to the target filesystem, which assigns it a new inode, and then the original file is deleted from the source filesystem.

- If the file had hardlinks within the original filesystem, these links are not copied to the new filesystem. Instead, they stay behind and keep pointing to the original inode, so the original content survives on the source filesystem until the last remaining link to it is removed. This means that after the move, the hardlinks in the original filesystem still point to the content that was there before the move, but there's no link between these and the newly copied file in the new filesystem.
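
The copy-then-delete behaviour can be mimicked in Python. Since I can't assume a second filesystem is mounted on your machine, this sketch fakes the cross-filesystem move inside one temp directory with a hypothetical `move_by_copy` helper - the effect on the hardlink is the same.

```python
import os
import shutil
import tempfile


def move_by_copy(src, dst):
    """Mimic a cross-filesystem move: copy the data, then remove the source name."""
    shutil.copy2(src, dst)
    os.remove(src)


with tempfile.TemporaryDirectory() as tmp:
    src = os.path.join(tmp, "file.txt")
    link = os.path.join(tmp, "link.txt")
    dst = os.path.join(tmp, "moved.txt")

    with open(src, "w") as f:
        f.write("payload")
    os.link(src, link)

    move_by_copy(src, dst)

    # The copy is a brand new file; the old hardlink still holds the old inode.
    shares_inode = os.path.samefile(dst, link)
    with open(link) as f:
        link_content = f.read()
    link_count = os.stat(link).st_nlink

    print("moved file shares inode with old link:", shares_inode)
    print("old link content:", link_content)
    print("old link count:", link_count)
```

So after such a move you end up with two independent copies of the data: the new file on the target, and whatever hardlinks were left behind on the source.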

I believe hardlinks shouldn't affect ZFS migrations either, since ZFS should preserve the inode and object ID information, as per my understanding.

In addition to static linking, you can also load bundled dynamic libraries via RPATH, which is an entry in an ELF binary's dynamic section where you can specify a custom library search path. Assuming you're using gcc (with the GNU linker), you could set the LD_RUN_PATH environment variable at link time to specify the folder path containing your libraries. There may be a similar option for other toolchains too, because in the end they'd be spitting out an ELF, and RPATH is part of the ELF spec.
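
If you want to check what actually ended up in a binary, `readelf -d` (from binutils) prints the dynamic section, including any RPATH/RUNPATH entries. Here's a small sketch that parses that output in Python. The sample text, library name and paths are made up for illustration, and the regex assumes the usual GNU readelf output format.

```python
import re
import subprocess

_RPATH_LINE = re.compile(r"\((RPATH|RUNPATH)\)\s+Library (?:rpath|runpath): \[(.*)\]")


def parse_rpath(readelf_output):
    """Extract (tag, [paths]) pairs from the text printed by `readelf -d`."""
    entries = []
    for line in readelf_output.splitlines():
        match = _RPATH_LINE.search(line)
        if match:
            entries.append((match.group(1), match.group(2).split(":")))
    return entries


def read_rpath(binary_path):
    """Run readelf on a binary and return its RPATH/RUNPATH entries (needs binutils)."""
    result = subprocess.run(
        ["readelf", "-d", binary_path], capture_output=True, text=True, check=True
    )
    return parse_rpath(result.stdout)


# Hypothetical readelf output for an app that bundles its libraries in ./lib
SAMPLE = """\
 0x0000000000000001 (NEEDED)             Shared library: [libfoo.so.1]
 0x000000000000001d (RUNPATH)            Library runpath: [$ORIGIN/lib:/opt/myapp/lib]
"""

print(parse_rpath(SAMPLE))
```

The `$ORIGIN` token is expanded by the dynamic loader to the directory containing the binary, which is what makes a bundled `lib` folder relocatable. With gcc you can also set it directly at link time with something like `-Wl,-rpath,'$ORIGIN/lib'`.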

BUT I agree with what @Nibodhika@lemmy.world wrote - this is generally a bad idea. In addition to what they stated, a big issue could be the licensing - the license of your app may not be compatible with the license of the library. For instance, if the library is licensed under the GPL, then you have to ship your app under GPL as well - which you may or may not want. And if you're using several different libraries, then you'll have to verify each of their licenses and ensure that you're not violating or conflicting with any of them.

Another issue is that the libraries you ship with your program may not be optimal for the user's device or use case. For instance, a user may prefer libraries compiled for their particular CPU's microarchitecture for best performance, and by forcing your own libraries, you'd be denying them that. That's why it's best left to the distro/user.

In saying that, you could ship your app as a Flatpak - that way you don't have to worry about the versions of libraries on the user's system or causing conflicts.

The Linux kernel supports reading NTFS but not writing to it.

That's not true. Since kernel 5.15, Linux ships the new NTFS3 driver, which supports both read and write. And performance-wise it's much better than the old ntfs-3g FUSE driver, and it's also arguably better in stability too, since at least kernel 6.2.

Personally though, I'd recommend being on 6.8+ if you're going to use NTFS seriously, or at the very least, 6.2 (as 6.2 introduces the mount options windows_names and nocase). @snooggums@midwest.social
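
If you'd rather check this programmatically than eyeball `uname -r`, here's a rough Python sketch that maps a kernel release string onto the version thresholds mentioned above. The helper names and the wording of the messages are mine, and it only looks at the version number - it can't tell whether your distro actually built the ntfs3 module or backported anything.

```python
import platform
import re


def kernel_version(release=None):
    """Return (major, minor) parsed from a release string like '6.8.0-45-generic'."""
    release = platform.release() if release is None else release
    match = re.match(r"(\d+)\.(\d+)", release)
    if not match:
        raise ValueError(f"unrecognised kernel release: {release!r}")
    return int(match.group(1)), int(match.group(2))


def ntfs3_advice(release=None):
    """Map a kernel version onto the thresholds mentioned above."""
    version = kernel_version(release)
    if version < (5, 15):
        return "no ntfs3 driver in this kernel"
    if version < (6, 2):
        return "ntfs3 available, but without windows_names/nocase"
    if version < (6, 8):
        return "ntfs3 with windows_names/nocase available"
    return "recommended for serious NTFS use"


print(ntfs3_advice("6.8.0-45-generic"))
```

On a suitable kernel, mounting would then look something like `mount -t ntfs3 -o windows_names,nocase /dev/sdXN /mnt/win`, where the device and mount point are placeholders.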

Uh oh, you better watch out for those feijoa-stealing whores!