Heard fireworks go off at 2:11AM in Welly... very random. Anyone know what could be the reason?

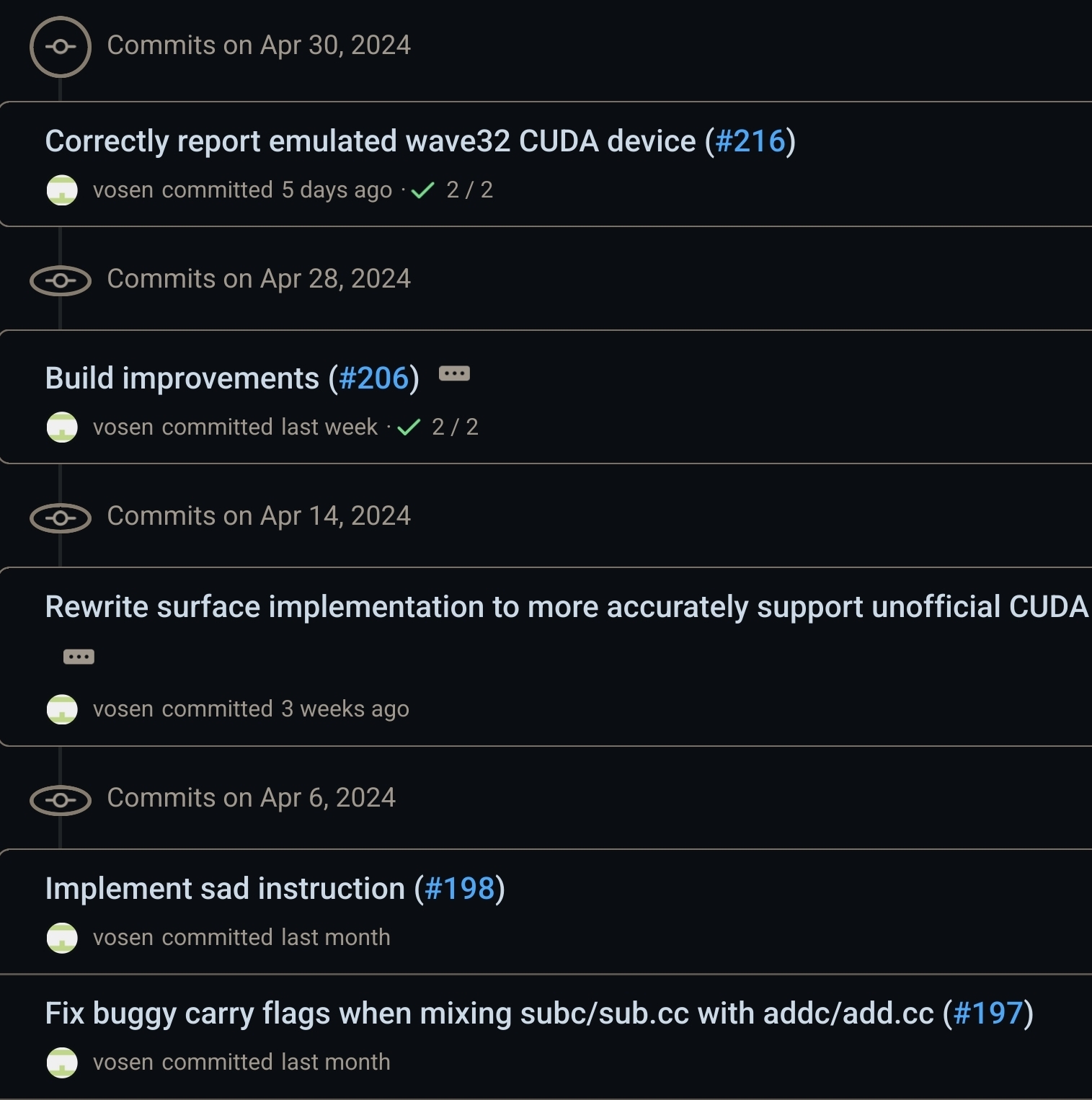

I based my statements on the actual commits being made to the repo. From what I can see, it's certainly not "floundering":

In any case, ZLUDA is really just a stop-gap arrangement so I don't see it being an issue either way - with more and more projects supporting AMD cards, it won't be needed at all in the near future.

And this is one of the reasons why I don't like 'em. They're way too overengineered, IMO. Which is weird because so many mk enthusiasts prefer minimal setups. In my case for instance, I just have a braided Type-C cable running straight from my board to the back of my desk. Just a simple, straight line. Easy to connect/disconnect/clean/maintain/replace. Minimal. I personally don't see why/how an aviator cable could improve either the aesthetics or the functionality. In fact, I can only think of downsides.

It's not "optimistic", it's actually happening. Don't forget that GPU compute is a pretty vast field, and not every field/application has a hard-coded dependency on CUDA/nVidia.

For instance, both TensorFlow and PyTorch work fine with ROCm 6.0+ now, and this enables a lot of ML tasks such as running LLMs like Llama2. Stable Diffusion also works fine - I tested 2.1 a while back and performance was great on my Arch + 7800 XT setup. There are plenty more such examples where AMD is already a viable option. And don't forget ZLUDA too, which continues to be improved.

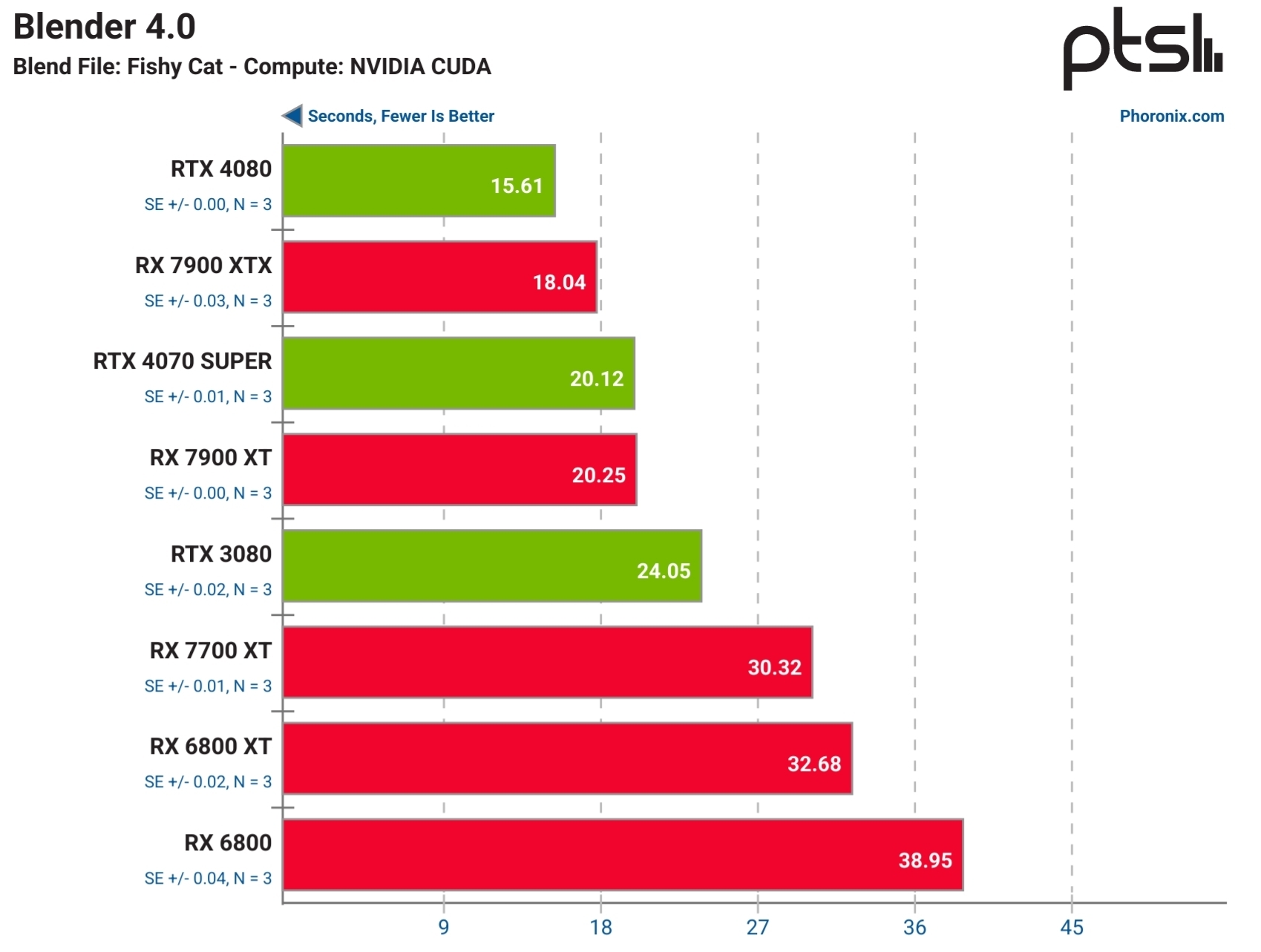

I mean, look at this benchmark from Feb, that's not bad at all:

And ZLUDA has had many improvements since then, so this will only get better.

Of course, whether all this makes an actual dent in nVidia's compute market share is a completely different story (thanks to enterprise $$$ + existing hw that's already out there), but the point is that for many people/projects, ROCm is already a viable alternative to CUDA. And this will only improve with time. Just within the last 6 months, for instance, there have been VAST improvements in both ROCm (like the 6.0 release) and compatibility with major projects (like PyTorch). 6.1 was released only a few weeks ago with improved SD performance, a new video decode component (rocDecode), much faster matrix calculations with the new EigenSolver etc. It's a very exciting space to be in, to be honest.

So you'd have to be blind to not notice these rapid changes that are really happening. And yes, right now it's still very, very early days for AMD and they've got a lot of catching up to do, and there's a lot of scope for improvement too. But it's happening for sure, AMD + the community aren't sitting idle.

The best option is to just support the developer/project by the method they prefer the most (ko-fi/patreon/crypto/beer/t-shirts etc).

If the project doesn't accept any donations but accepts code contributions instead (or you want to develop something that doesn't exist), you can directly hire a freelancer to work on what you want, from sites like freelancer.com.

Run journalctl -f in a terminal before starting Lutris, then launch Lutris and check the journalctl output for any errors.
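If you'd rather grab the errors after the fact instead of watching the live output, here's a rough Python sketch. It assumes a systemd distro with journalctl on your PATH, and the function names are just made up for illustration. It only uses journalctl's standard --since, --priority and --no-pager options.

```python
import shutil
import subprocess


def build_journalctl_cmd(since="10 minutes ago", priority="err"):
    """Build a journalctl command listing recent entries at `priority` or worse."""
    return ["journalctl", "--no-pager", "--since", since, "--priority", priority]


def recent_errors(since="10 minutes ago"):
    """Run journalctl and return its output as text (hypothetical helper)."""
    result = subprocess.run(
        build_journalctl_cmd(since),
        capture_output=True,
        text=True,
        check=False,  # don't raise if journalctl exits non-zero
    )
    return result.stdout


if __name__ == "__main__":
    # Only try to run it if journalctl actually exists on this system.
    if shutil.which("journalctl"):
        print(recent_errors())
    else:
        print("journalctl not found, is this a systemd distro?")
```

Launch Lutris, reproduce the problem, then run this right after and look for anything mentioning Lutris or your GPU driver.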

Hmm, so I've had a look and it seems like Xournal++ only supports x86_64. Which means that if you get the Snapdragon version, you'll need to run it using an x86 emulator like FEX-Emu or Box64, and this will affect the performance and may also introduce compatibility issues. So you'll need to do your own research and find out if someone's managed to run it on ARM / Snapdragon 7c, and if there are any issues etc.

You could get the Celeron version instead, but personally I can't recommend a Celeron to anyone in good faith, so you'll have to make your own decision, sorry.

> doas is relatively simple (a few hundred LOC)

Actually it's close to 2k lines of code (1,946 to be exact). But yes, it's certainly a lot simpler than sudo (132k).

Forget Linux for a second. What you need to be aware of is that both the variants come with only 4GB soldered-on RAM and eMMC storage. That means, even if you do manage to get Linux going on them, it's going to be super slow for any sort of practical Web/GUI needs. 4GB RAM is barely enough to run a browser these days, and if you tack on a full-fledged DE and multitasking with other apps, you'll be pushing memory pages to the disk (i.e., swapping). And when that happens, you'll really feel the slowness. Trust me, you don't want to be swapping to eMMC - that's super old tech, something like 3x slower than UFS, which in turn is a LOT slower than M.2 NVMe (the current standard used in "proper" laptops/convertibles).
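If you want to see how close a machine is to swapping, the kernel exposes the numbers in /proc/meminfo. Here's a minimal Python sketch that reads them. The function names are mine, and it assumes a Linux system where /proc/meminfo has the usual MemAvailable, SwapTotal and SwapFree fields (values in kB).

```python
import os


def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a dict of {field: integer value}."""
    values = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        parts = rest.split()
        # Keep only well-formed "Name:   12345 kB" style lines.
        if sep and parts and parts[0].isdigit():
            values[name.strip()] = int(parts[0])
    return values


def swap_used_mib(meminfo):
    """Swap currently in use, in MiB (SwapTotal minus SwapFree)."""
    return (meminfo.get("SwapTotal", 0) - meminfo.get("SwapFree", 0)) / 1024


if __name__ == "__main__":
    if os.path.exists("/proc/meminfo"):
        with open("/proc/meminfo") as f:
            info = parse_meminfo(f.read())
        print(f"RAM available: {info.get('MemAvailable', 0) / 1024:.0f} MiB")
        print(f"Swap in use:   {swap_used_mib(info):.0f} MiB")
```

Run it with a browser and a few apps open. If available RAM is near zero and swap in use keeps climbing, that's exactly the situation you'd be in on a 4GB device.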

Also, consider this for perspective - even budget smartphones these days come with at least 6GB RAM and UFS storage. So this laptop/convertible - a device meant for productivity - is a complete ripoff.

If money is an issue, then just buy a used laptop (from eBay, or whatever you guys use there). If you're aiming for good Linux compatibility then ThinkPads are a safe bet. But since you're after a Surface-like device, you could just get any older Surface device. Why settle for an imitation when you can get the real thing? In any case, most older x86 laptops from mainstream brands should work fine in Linux in general, just google the model to see if there are any quirks or issues.

Regardless of your choice, avoid the Duet 3. 4GB RAM is completely unacceptable for a laptop in 2024.

Are you on Wayland? If so, try setting the theme using Nwg-Look instead. If not, stick with LXappearance. Also btw I just found out that LXappearance doesn't apply GTK settings directly. You'll need to log off and back on for the change to take effect, if you haven't done that already.

Also, what's the DE that you're using? Because if you're not on Gnome (from the sounds of it, you're on LXQt?), you may need to install certain GTK theme engine dependencies like gnome-themes-extra and gtk-murrine-engine. Reboot (or logoff/on), and try again.

Also worth trying a different theme such as Breeze or Arc. Maybe try a light variant as well.

If all else fails, open a bug report on the Lutris github.

Most likely that's a theme issue. Close Lutris, change back to the default Adwaita theme and try again.

Since you're on Linux, it's just a matter of installing the right packages from your distro's package manager. Lots of articles on the Web, just google your app + "ROCm". Main thing you gotta keep in mind is the version dependencies: since ROCm 6.0/6.1 was released recently, some programs may not yet have been updated for it. So if your distro packages the most recent version, your app might not yet support it.

This is why many ML apps also come as a Docker image with specific versions of libraries bundled with them - so that could be an easier option for you, instead of manually hunting around for various package dependencies.

Also, chances are that your app may not even know/care about ROCm, if it just uses a library like PyTorch / TensorFlow etc. So just check its requirements first.
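If your app is PyTorch-based, a quick way to confirm you actually ended up with a ROCm build is something like the sketch below. It's a rough check, not anything official: the function name is made up, and it assumes PyTorch is installed in the Python environment your app uses. On ROCm builds torch.version.hip is set, and the GPU is still exposed through the regular torch.cuda API.

```python
def describe_torch_backend():
    """Return a short string describing which GPU backend PyTorch was built for."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"

    # ROCm builds set torch.version.hip; CUDA builds set torch.version.cuda.
    hip_version = getattr(torch.version, "hip", None)
    cuda_version = getattr(torch.version, "cuda", None)
    # On ROCm builds this also reports AMD GPUs.
    gpu_visible = torch.cuda.is_available()

    if hip_version:
        backend = f"ROCm/HIP build ({hip_version})"
    elif cuda_version:
        backend = f"CUDA build ({cuda_version})"
    else:
        backend = "CPU-only build"
    return f"{backend}, GPU visible: {gpu_visible}"


if __name__ == "__main__":
    print(describe_torch_backend())
```

If it reports a CPU-only or CUDA build on an AMD card, you've most likely installed the wrong PyTorch package for your setup.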

As for AMD vs nVidia in general, there are mainly a few areas where AMD lags behind: RTX (ray tracing), compute and super sampling.

For RTX, there have been improvements in performance with the RDNA3 cards, but it does lag behind by a generation. For instance, the latest 7900 XTX's RTX performance is equivalent to the 3080.

Compute is catching up as I mentioned earlier, and in some cases the performance may even match nVidia. This is very application/library specific though, so you'll need to look it up.

Super Sampling is a bit of a weird one. AMD has FSR and it does a good job in general. In some cases, it may even perform better since it uses much simpler calculations, as opposed to nVidia's deep learning technique. And AMD's FSR method can be used with any card in fact, as long as the game supports it. And therein lies the catch, only something like 1/3rd of the games out there support it, and even fewer games support the latest FSR 3. But there are mods out there which can enable FSR (check Nexus Mods) that you might be able to use. In any case, FSR/DLSS isn't a critical thing, unless you're gaming on a 4K+ monitor.

You can check out Tom's Hardware GPU Hierarchy for the exact numbers - scroll down halfway to read about the RTX and FSR situation.

So yes, AMD does lag behind nVidia, but whether this impacts you really depends on your needs and use cases. If you're a Linux user though, getting an AMD is a no-brainer - it just works so much better, as in, no need to deal with proprietary driver headaches, no update woes, excellent Wayland support etc.